Publications

Publications grouped by year in reverse chronological order (* = equal contribution).

2025

- ICML CodeML

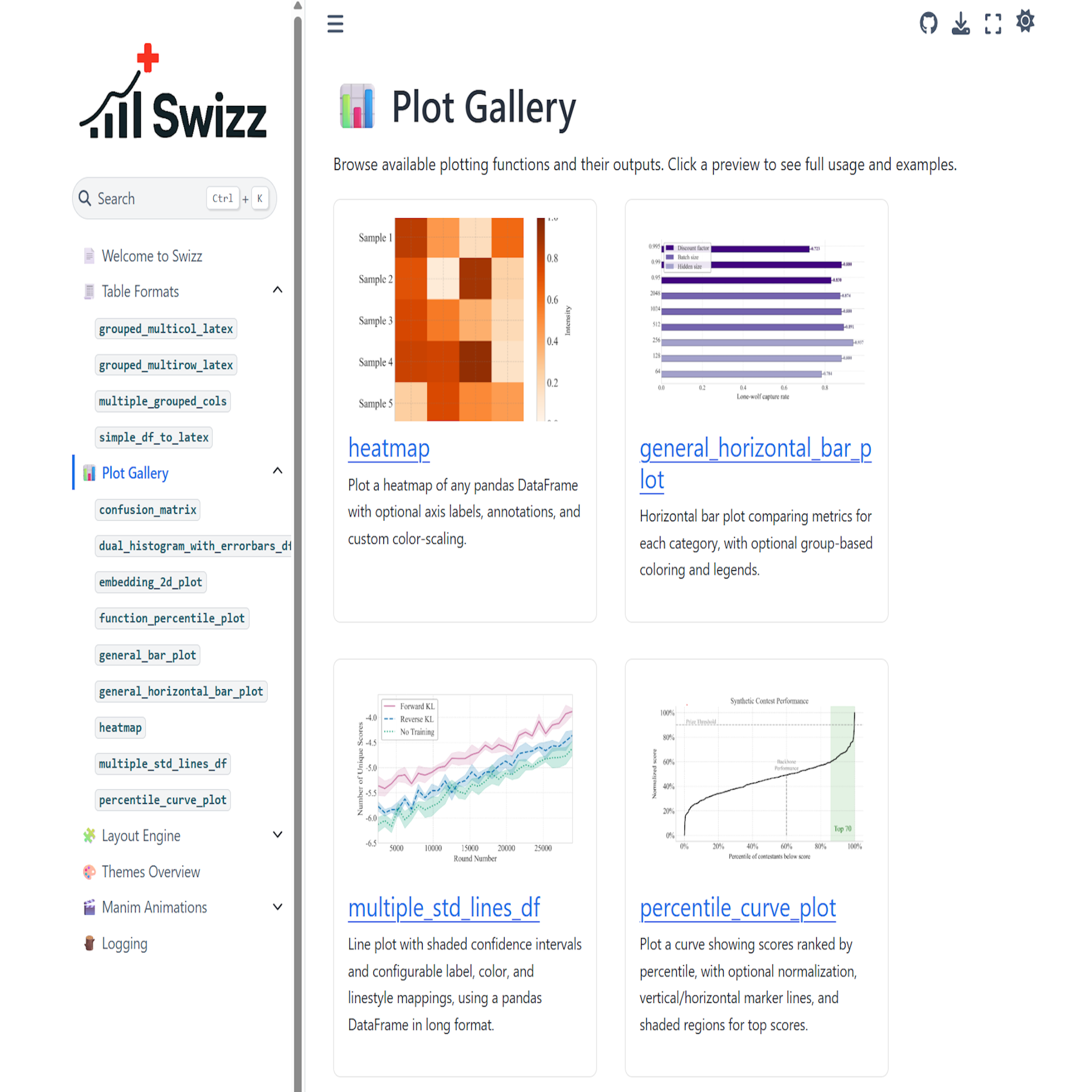

Workshop Swizz: One-Liner Figures, LaTeX Tables, and Flexible Layouts for Scientific PapersLars Quaedvlieg*, Andrea Miele*, and Caglar GulcehreInternational Conference on Machine Learning (ICML) CodeML Workshop, Jun 2025

Producing publication-quality visualizations and tables for machine learning papers is often tedious, time-consuming, and prone to inconsistencies. We introduce Swizz, a lightweight Python library that lets researchers generate elegant figures, LaTeX-ready tables, and customizable figure layouts with minimal code. Swizz enables one-line creation of consistent, conference-ready visualizations, including advanced plots and multilevel tables, and provides intuitive, composable layouts that simplify complex figure arrangements. Its automated styling and built-in visual gallery facilitate rapid experimentation, allowing researchers to focus more on research and less on formatting. Swizz integrates easily into existing workflows, is themed for major machine learning publication venues, and is open source (MIT), available on GitHub and PyPI.


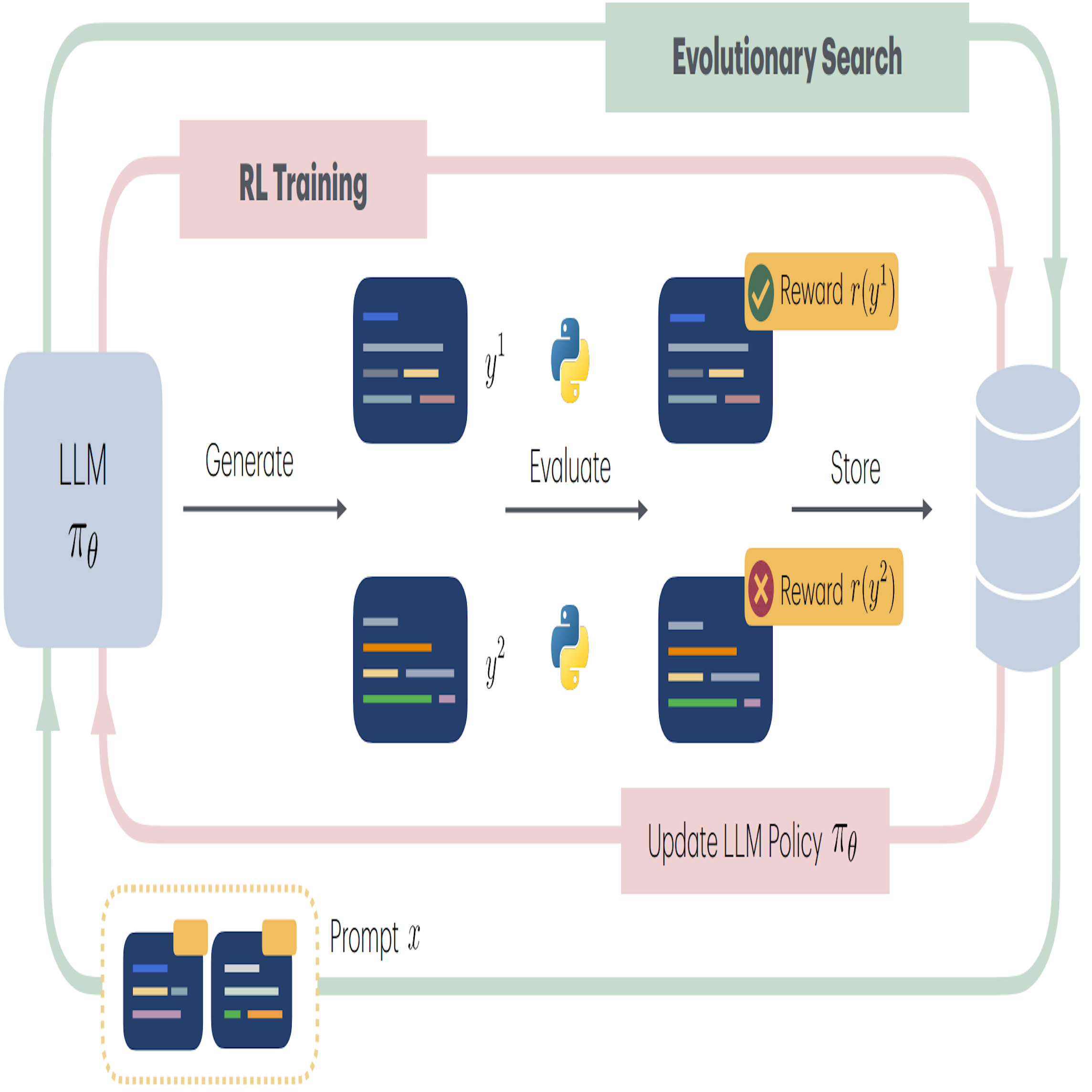

Algorithm Discovery With LLMs: Evolutionary Search Meets Reinforcement Learning
Anja Surina, Amin Mansouri, Lars Quaedvlieg, Amal Seddas, Maryna Viazovska, and 2 more authors
arXiv preprint arXiv:2504.05108, Apr 2025

Discovering efficient algorithms for solving complex problems has been an outstanding challenge in mathematics and computer science, requiring substantial human expertise over the years. Recent advances in evolutionary search with large language models (LLMs) have shown promise in accelerating algorithm discovery across various domains, particularly mathematics and optimization. However, existing approaches treat the LLM as a static generator, missing the opportunity to update the model with the signal obtained from evolutionary exploration. In this work, we propose to augment LLM-based evolutionary search by continuously refining the search operator (the LLM) through reinforcement learning (RL) fine-tuning. Our method uses evolutionary search as an exploration strategy to discover improved algorithms, while RL optimizes the LLM policy based on these discoveries. Experiments on three combinatorial optimization tasks (bin packing, the traveling salesman problem, and the flatpack problem) show that combining RL with evolutionary search makes the discovery of improved algorithms more efficient, showcasing the potential of RL-enhanced evolutionary strategies to help computer scientists and mathematicians design algorithms more efficiently.
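The interplay between evolutionary search and RL described above can be illustrated with a toy analogue. This is a minimal sketch under loud assumptions, not the paper's implementation: the LLM is replaced by a softmax policy over three hypothetical mutation operators (`flip_one`, `flip_many`, `reset_random`), candidate algorithms are replaced by bitstrings scored with a OneMax fitness, and RL fine-tuning is replaced by a REINFORCE update that rewards operators whose children improve on their parents.

```python
import math
import random

# Toy fitness (OneMax): number of ones. Stands in for an algorithm's benchmark score.
def fitness(bits):
    return sum(bits)

# Hypothetical mutation operators; in the paper the LLM itself plays this role.
def flip_one(bits, rng):
    out = bits[:]
    out[rng.randrange(len(out))] ^= 1
    return out

def flip_many(bits, rng, k=5):
    out = bits[:]
    for i in rng.sample(range(len(out)), k):
        out[i] ^= 1
    return out

def reset_random(bits, rng):
    return [rng.randint(0, 1) for _ in bits]

OPERATORS = [flip_one, flip_many, reset_random]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def evolve_with_rl(n_bits=40, pop_size=20, steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    logits = [0.0] * len(OPERATORS)  # policy parameters (stand-in for LLM weights)
    baseline = 0.0                   # running-mean reward, reduces gradient variance
    for _ in range(steps):
        # Evolutionary step: tournament selection of a parent.
        parent = max(rng.sample(population, 3), key=fitness)
        # The policy picks a search operator and produces a child.
        probs = softmax(logits)
        a = rng.choices(range(len(OPERATORS)), weights=probs)[0]
        child = OPERATORS[a](parent, rng)
        reward = fitness(child) - fitness(parent)  # improvement over the parent
        # Steady-state replacement: the child replaces the worst member if better.
        worst = min(range(pop_size), key=lambda i: fitness(population[i]))
        if fitness(child) > fitness(population[worst]):
            population[worst] = child
        # RL step: REINFORCE gradient of log softmax is one_hot(a) - probs.
        advantage = reward - baseline
        for j in range(len(logits)):
            grad = (1.0 if j == a else 0.0) - probs[j]
            logits[j] += lr * advantage * grad
        baseline = 0.9 * baseline + 0.1 * reward
    best = max(population, key=fitness)
    return best, softmax(logits)
```

Calling `evolve_with_rl()` returns the best bitstring found and the final operator probabilities. The design point the sketch tries to mirror is that the same loop both explores (evolution) and generates the reward signal used to update the operator (RL), so the operator is no longer static.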


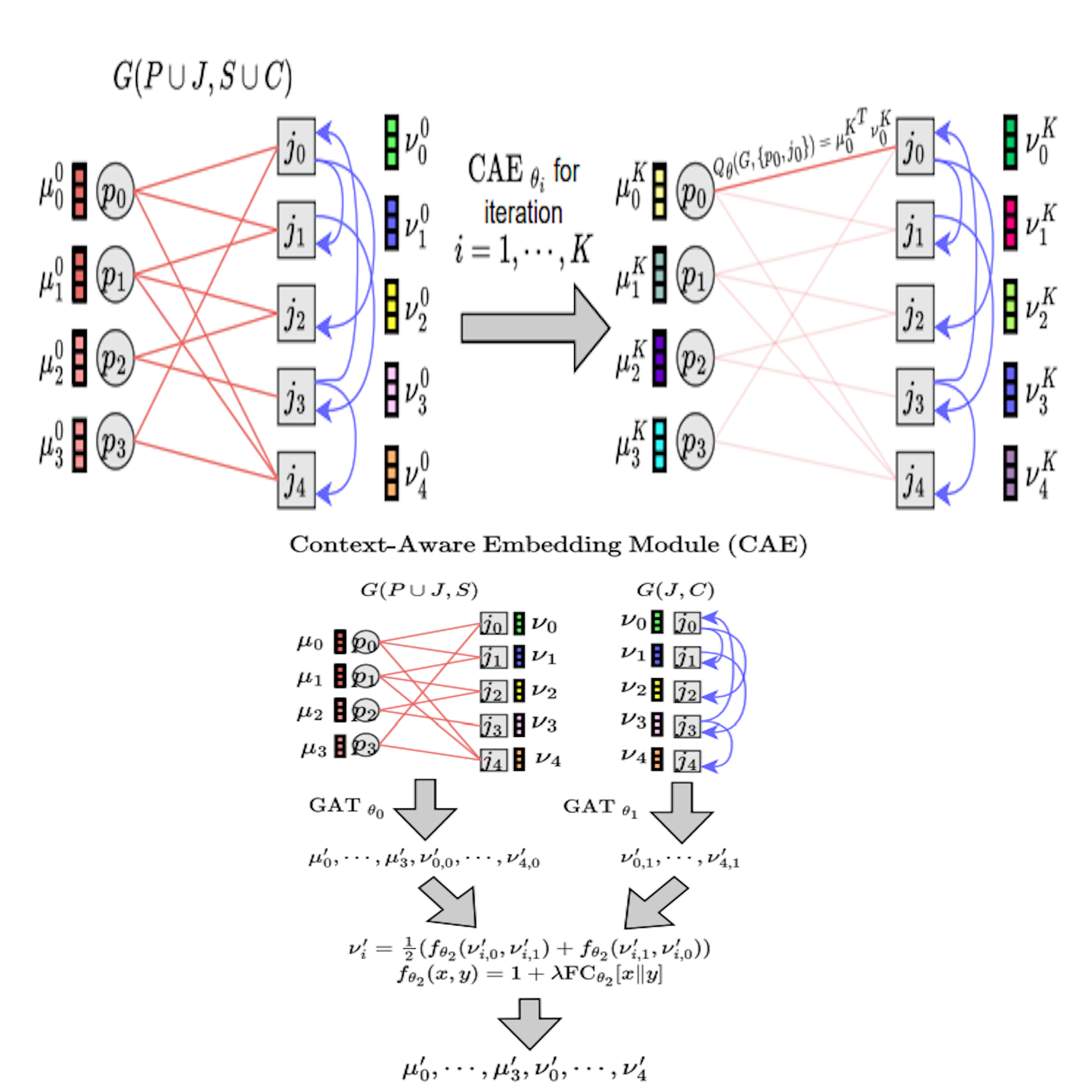

Optimizing Job Allocation using Reinforcement Learning with Graph Neural Networks
Lars Quaedvlieg
arXiv preprint arXiv:2501.19063, Jan 2025

Efficient job allocation in complex scheduling problems poses significant challenges in real-world applications. In this report, we propose a novel approach that leverages the power of Reinforcement Learning (RL) and Graph Neural Networks (GNNs) to tackle the Job Allocation Problem (JAP). The JAP involves allocating a maximum set of jobs to available resources while considering several constraints. Our approach enables learning of adaptive policies through trial-and-error interactions with the environment while exploiting the graph-structured data of the problem. By leveraging RL, we eliminate the need for manual annotation, a major bottleneck in supervised learning approaches. Experimental evaluations on synthetic and real-world data demonstrate the effectiveness and generalizability of our proposed approach, outperforming baseline algorithms and showcasing its potential for optimizing job allocation in complex scheduling problems.

2024

- RLC

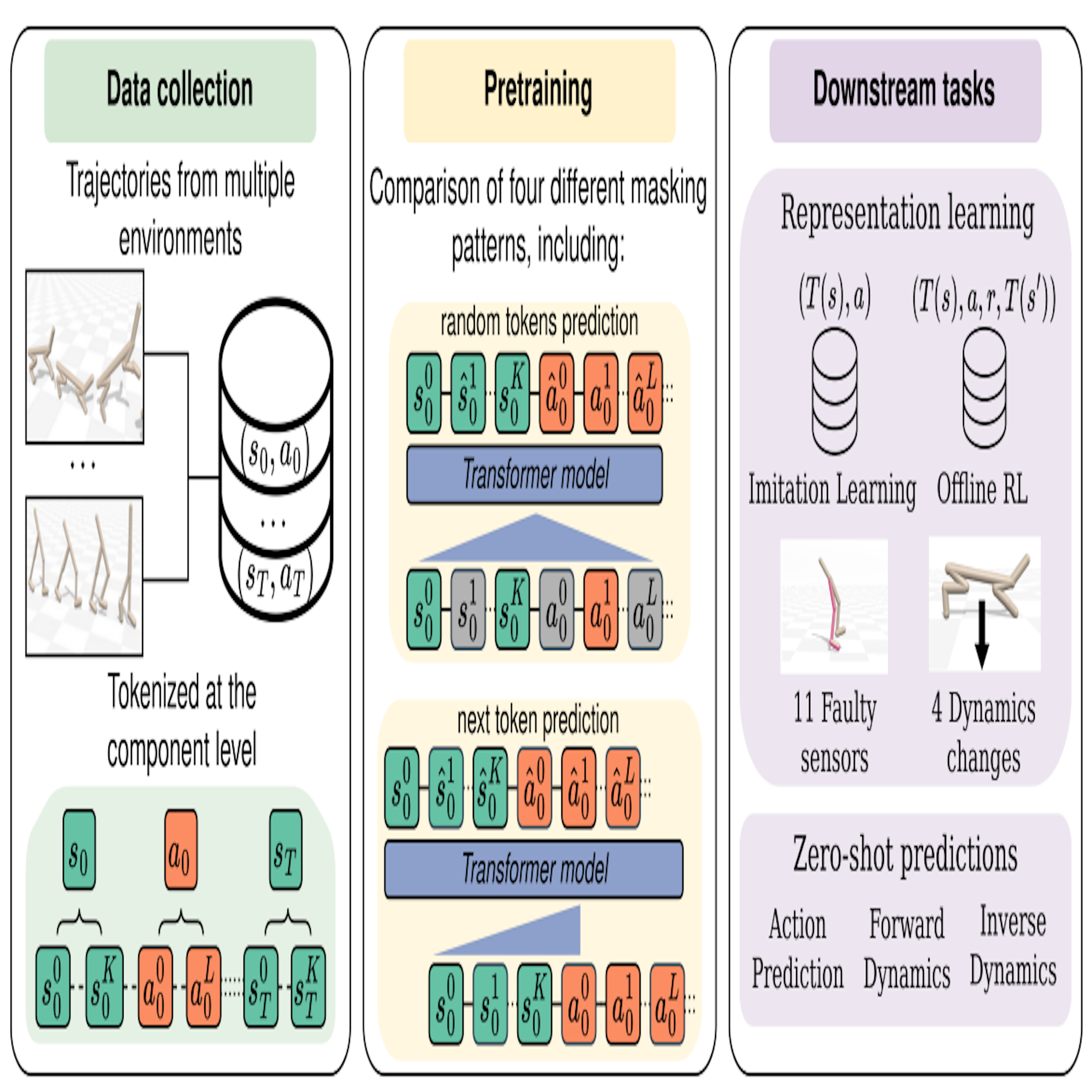

PASTA: Pretrained Action-State Transformer Agents
Raphael Boige*, Yannis Flet-Berliac*, Lars Quaedvlieg, Arthur Flajolet, Guillaume Richard, and 1 more author
Reinforcement Learning Journal, Aug 2024

Self-supervised learning has brought about a revolutionary paradigm shift in various computing domains, including NLP, vision, and biology. Recent approaches pre-train transformer models on vast amounts of unlabeled data, which then serve as a starting point for efficiently solving downstream tasks. In reinforcement learning, researchers have recently adapted these approaches, developing models pre-trained on expert trajectories. This advancement enables the models to tackle a broad spectrum of tasks, ranging from robotics to recommendation systems. However, existing methods mostly rely on intricate pre-training objectives tailored to specific downstream applications. This paper conducts a comprehensive investigation of models referred to as pre-trained action-state transformer agents (PASTA). Our study follows a unified methodology and covers an extensive set of general downstream tasks, including behavioral cloning, offline RL, sensor failure robustness, and dynamics change adaptation. Our objective is to systematically compare various design choices and offer valuable insights that will aid practitioners in developing robust models. Key highlights of our study include tokenization at the component level for actions and states, the use of fundamental pre-training objectives such as next-token prediction or masked language modeling, simultaneous training of models across multiple domains, and the application of various fine-tuning strategies. The models developed in this study contain fewer than 7 million parameters, allowing a broad community to use them and reproduce our experiments.
We hope that this study will encourage further research into the use of transformers with first-principles design choices to represent RL trajectories and contribute to robust policy learning.
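Component-level tokenization, as named in the abstract, means each scalar dimension of a state or action becomes its own token, rather than one token per state or action vector. The sketch below is an illustration under stated assumptions, not the PASTA codebase: it assumes a simple `(value, component_id)` token, interleaves state components then action components at each timestep, and uses hypothetical helper names (`tokenize_trajectory`, `next_token_pairs`). How values are embedded (discretized or linearly projected) is deliberately left out.

```python
from typing import List, Sequence, Tuple

# Assumed token format: (scalar value, component id).
Token = Tuple[float, int]

def tokenize_trajectory(states: Sequence[Sequence[float]],
                        actions: Sequence[Sequence[float]]) -> List[Token]:
    """Flatten a trajectory into one token per state/action component.

    Ids 0..ds-1 index state dimensions and ds..ds+da-1 index action
    dimensions, so a learned embedding could tell components apart.
    """
    if len(states) != len(actions):
        raise ValueError("expected one action per state")
    ds = len(states[0])
    tokens: List[Token] = []
    for s, a in zip(states, actions):
        tokens.extend((float(v), i) for i, v in enumerate(s))
        tokens.extend((float(v), ds + j) for j, v in enumerate(a))
    return tokens

def next_token_pairs(tokens: List[Token]) -> Tuple[List[Token], List[Token]]:
    """Inputs and targets for next-token prediction: token t+1 is predicted from tokens <= t."""
    return tokens[:-1], tokens[1:]
```

For a two-step trajectory with 2-dimensional states and 1-dimensional actions, `tokenize_trajectory([[0.1, 0.2], [0.3, 0.4]], [[1.0], [-1.0]])` yields six tokens, `[(0.1, 0), (0.2, 1), (1.0, 2), (0.3, 0), (0.4, 1), (-1.0, 2)]`, so sequence length grows with the number of components rather than the number of timesteps.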

2023

- NeurIPS

Maximum Independent Set: Self-Training through Dynamic Programming
Lars Quaedvlieg*, L. Brusca*, S. Skoulakis, G. Chrysos, and V. Cevher
Advances in Neural Information Processing Systems (NeurIPS), Jul 2023

This work presents a novel graph neural network (GNN) framework, inspired by dynamic programming (DP), for solving the maximum independent set (MIS) problem. Specifically, given a graph, we propose a DP-like recursive algorithm based on GNNs that first constructs two smaller subgraphs, predicts which one has the larger MIS, and then uses it in the next recursive call. To train our algorithm, we require annotated comparisons of different graphs with respect to their MIS size. Annotating these comparisons with the output of our own algorithm leads to a self-training process in which a more accurate algorithm yields more accurate self-annotation of the comparisons, and vice versa. We provide numerical evidence showing the superiority of our method over prior methods on multiple synthetic and real-world datasets.
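The DP-like recursion can be illustrated with a minimal sketch. It assumes the two smaller subgraphs are the standard MIS branches for a vertex v: the graph with v removed (v excluded) and the graph with v and its neighbours removed (v included). The learned GNN comparator is replaced by a hypothetical exact, exponential-time `exact_comparator`, and the tail-recursive call is written as a loop; all function names are illustrative, not the paper's code.

```python
from typing import Callable, Dict, Set

Graph = Dict[int, Set[int]]  # undirected graph as adjacency sets

def remove_nodes(g: Graph, nodes: Set[int]) -> Graph:
    """Return the subgraph induced by the vertices not in `nodes`."""
    return {u: nbrs - nodes for u, nbrs in g.items() if u not in nodes}

def exact_mis_size(g: Graph) -> int:
    """Exponential-time MIS size via the same two-branch recursion."""
    if not g:
        return 0
    v = next(iter(g))
    return max(exact_mis_size(remove_nodes(g, {v})),
               1 + exact_mis_size(remove_nodes(g, {v} | g[v])))

def exact_comparator(g_skip: Graph, g_take: Graph) -> bool:
    """True if including v (1 + MIS of g_take) is at least as good as excluding it."""
    return 1 + exact_mis_size(g_take) >= exact_mis_size(g_skip)

def dp_mis(g: Graph, prefer_take: Callable[[Graph, Graph], bool]) -> Set[int]:
    """Build an independent set by repeatedly choosing one of two subgraphs."""
    independent: Set[int] = set()
    while g:
        v = next(iter(g))
        g_skip = remove_nodes(g, {v})         # branch 1: v excluded
        g_take = remove_nodes(g, {v} | g[v])  # branch 2: v included, neighbours dropped
        if prefer_take(g_skip, g_take):
            independent.add(v)
            g = g_take
        else:
            g = g_skip
    return independent
```

On a star with center 0 and leaves 1 to 4, `dp_mis(star, exact_comparator)` skips the center and returns `{1, 2, 3, 4}`. With an exact comparator the result is always a maximum independent set; swapping in a learned comparator trades that guarantee for speed, and the comparator's own outputs could then serve as the training annotations the abstract describes.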

2022


Multi-Agent Reinforcement Learning with Graph Neural Networks for Online Multi-Hoist Scheduling
Lars Quaedvlieg
Bachelor’s Thesis, Jul 2022

This thesis explores an approach to the online multi-hoist scheduling problem that combines graph neural networks and multi-agent reinforcement learning. It models the problem with two sets of agents: source agents and hoists. When requested, a source agent selects a job from the queue of jobs at a source station and hands it to the hoists. The hoists are responsible for picking up and dropping off jobs at stations, and coordinate with each other to avoid scenarios that would result in a deadlock. The devised algorithms are trained and benchmarked against other approaches, such as random and heuristic algorithms. Further insight into the methods is obtained by applying dimensionality reduction to the neural activations produced for environment states. The results show that deadlocks are avoided in all experiments. Furthermore, the approach in this thesis outperforms the other approaches in the benchmark by 7.50% to 10%. The analysis of the neural activations shows that the hoist agents estimate that situations with many jobs being processed yield a higher job throughput than situations with fewer. Further research into the overall approach is recommended, but the results show potential to perform well against other approaches in the existing literature.