CS-330: Deep Multi-Task and Meta Learning - Introduction

I have been incredibly interested in the recent wave of multimodal foundation models, especially in robotics and sequential decision-making. Since I never had a formal introduction to this topic, I decided to audit the Deep Multi-Task and Meta Learning course, which is taught yearly by Chelsea Finn at Stanford. I will mainly document my takes on the lectures, hopefully making it a nice read for people who would like to learn more about this topic!

Introduction

The course CS 330: Deep Multi-Task and Meta Learning, by Chelsea Finn, is taught on a yearly basis and discusses the foundations and current state of multi-task learning and meta learning.

Note: I am discussing the content of the edition in Fall 2023, which no longer includes reinforcement learning. If you are interested in this, I will be auditing CS 224R Deep Reinforcement Learning later this spring, which is also taught by Chelsea Finn.

In an attempt to improve my writing skills, provide useful summaries, and voice my opinions, I have decided to discuss the content of every lecture on this blog. In this post, I will give an overview of the course and explain why it is important for AI, especially now.

This course focuses on problems that are composed of multiple tasks and studies how the structure arising from these tasks can be leveraged to learn more efficiently or effectively, including:

- Self-supervised pre-training for downstream few-shot learning and transfer learning.

- Meta-learning methods that aim to learn efficient learning algorithms that can learn new tasks quickly.

- Curriculum and lifelong learning, where the problem requires learning a sequence of tasks, leveraging their shared structure to enable knowledge transfer.

Lectures

The lecture schedule of the course is as follows:

- Multi-task learning

- Transfer learning & meta learning

- Black-box meta-learning & in-context learning

- Optimization-based meta-learning

- Few-shot learning via metric learning

- Unsupervised pre-training for few-shot learning (contrastive)

- Unsupervised pre-training for few-shot learning (generative)

- Advanced meta-learning topics (task construction)

- Variational inference

- Bayesian meta-learning

- Advanced meta-learning topics (large-scale meta-optimization)

- Lifelong learning

- Domain adaptation and domain generalization

- Frontiers & open challenges

I am excited to start discussing these topics in greater detail! Check this page regularly for updates, since I will link to new posts whenever they are available!

Why multi-task and meta-learning?

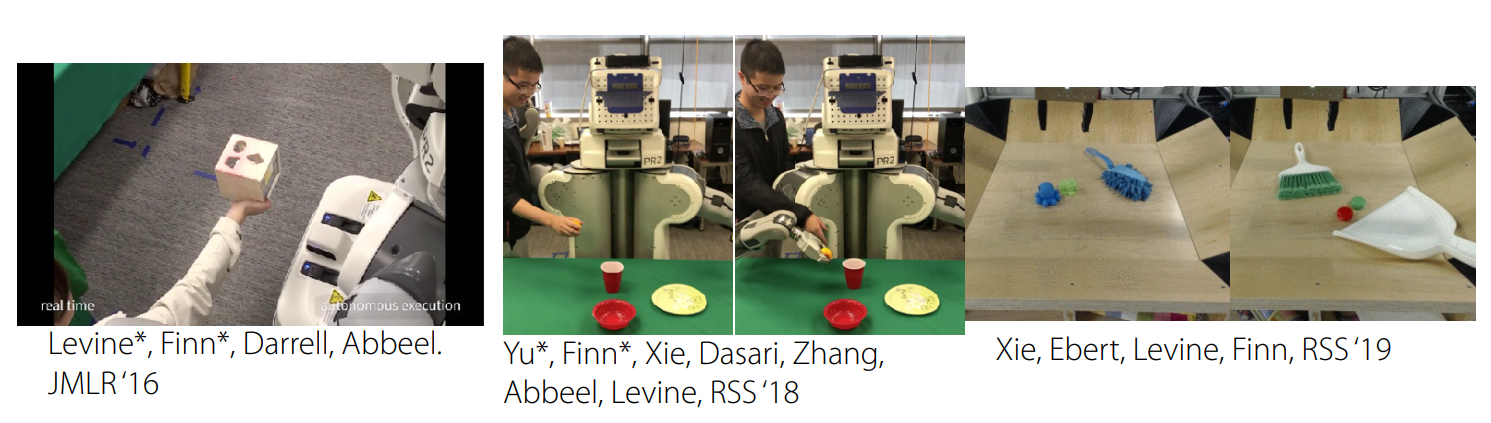

Robots are embodied in the real world and must generalize across tasks. To do so, they need some common-sense understanding, and supervision cannot be taken for granted.

Earlier robotics and reinforcement learning research mainly focused on problems that required learning a single task from scratch. This paradigm is also common in other fields, such as object detection and speech recognition. In contrast, humans are generalists who exploit common structure to solve new problems more efficiently.

Beyond generalist agents, deep multi-task and meta learning are useful for any problem where a common structure can improve the efficiency or effectiveness of a model. It can be impractical to develop a separate model for each specific task (e.g., each robot, person, or disease), especially when the data available for each individual task is scarce.

If you need to learn something new quickly from only a few examples (as in few-shot learning), you need to leverage prior experience to make decisions.

But why now? With the current pace of research in AI, many researchers are looking into using multimodal information to develop their models. Especially in robotics, foundation models seem to be the topic of 2024, and many advancements have been made in the past year.

What are tasks?

Given a dataset $\mathcal{D}$ and a loss function $\mathcal{L}$, we hope to develop a model $f_\theta$. Different tasks can be used to train this model; simple examples of what can distinguish one task from another are different objects, people, objectives, lighting conditions, words, or languages.
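
To make this notation a bit more concrete, here is a minimal Python sketch, assuming a task can be represented as nothing more than a dataset plus a loss function, and that all tasks are evaluated with one shared model $f_\theta$. The `Task` container, the toy data, the two losses (`mse`, `mae`) and the linear model `f` are hypothetical illustrations of my own, not code from the course.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Example = Tuple[float, float]  # one (x, y) pair
Loss = Callable[[List[float], List[float]], float]


@dataclass
class Task:
    """Hypothetical container: a task is a dataset D plus a loss L."""
    name: str
    data: List[Example]
    loss: Loss


def mse(preds, ys):
    """Mean squared error."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def mae(preds, ys):
    """Mean absolute error."""
    return sum(abs(p - y) for p, y in zip(preds, ys)) / len(ys)


def f(theta, x):
    """One shared model f_theta; here simply linear, f_theta(x) = w * x + b."""
    w, b = theta
    return w * x + b


def task_loss(theta, task):
    """Evaluate the shared model on one task's data using that task's own loss."""
    preds = [f(theta, x) for x, _ in task.data]
    return task.loss(preds, [y for _, y in task.data])


# Two toy tasks: same input space, different data and different objectives.
tasks = [
    Task("task_a", [(0.0, 1.0), (1.0, 1.0)], mse),
    Task("task_b", [(1.0, 3.0), (2.0, 2.0)], mae),
]
theta = (1.0, 0.0)
for task in tasks:
    print(task.name, task_loss(theta, task))
```

The only point of the sketch is that a task is defined by its data and its objective, while the parameters $\theta$ are shared; that sharing is what multi-task learning tries to exploit.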

The critical assumption here is that different tasks must share some common structure. Fortunately, in practice this is very often the case, even for tasks that seem unrelated. For example, the laws of physics and the rules of English are shared across many tasks.

- The multi-task problem: Learn a set of tasks more quickly or more proficiently than learning them independently.

- The meta-learning problem: Given data on previous task(s), learn a new task more quickly and/or more proficiently.
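
Written as optimization problems, one common way to formalize the first statement (my own notation, assuming $T$ tasks, each with its own dataset $\mathcal{D}_i$ and loss $\mathcal{L}_i$) contrasts training a separate parameter vector for each task with training a single shared $\theta$ on the sum of the task losses:

$$
\theta_i^\star = \arg\min_{\theta_i} \mathcal{L}_i(\theta_i, \mathcal{D}_i) \quad (i = 1, \dots, T)
\qquad \text{vs.} \qquad
\theta^\star = \arg\min_{\theta} \sum_{i=1}^{T} \mathcal{L}_i(\theta, \mathcal{D}_i)
$$

The second statement instead assumes that only the data $\mathcal{D}_1, \dots, \mathcal{D}_n$ of previous tasks is available up front, and asks for good performance on a new task $n+1$ after seeing only a small dataset $\mathcal{D}_{n+1}$.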

Doesn’t multi-task learning reduce to single-task learning?

It does when you aggregate the data across all tasks and train a single model on it, which is actually one approach to multi-task learning. However, what if you want to learn new tasks? How do you tell the model which task to perform? And what if aggregating the data doesn't work?
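
A small pure-Python sketch can make both sides of this concrete, assuming two hypothetical toy tasks that share one squared-error loss. Part (1) checks that summing the per-task losses gives exactly the same objective as one pooled dataset, which is why aggregation turns multi-task learning into single-task learning. Part (2) shows how pooling can fail when the tasks disagree on the same input, and how conditioning on a task identifier (one common way to tell the model which task to do, here simply part of a lookup key) removes the conflict. The tasks, `f`, `sum_sq` and `best_possible_loss` are illustrative names of my own.

```python
from collections import defaultdict
from statistics import mean


def sum_sq(preds, ys):
    """Summed (not averaged) squared error, so per-task losses add up exactly."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys))


# Two toy tasks on the same inputs: task 0 is y = x + 1, task 1 is y = x - 1.
xs = [0.0, 1.0, 2.0, 3.0]
tasks = [[(x, x + 1.0) for x in xs], [(x, x - 1.0) for x in xs]]


def f(theta, x):
    """A shared model f_theta(x) = theta * x (hypothetical, for illustration)."""
    return theta * x


# 1) Summing per-task losses gives the same objective as one pooled dataset.
theta = 1.0
per_task = sum(sum_sq([f(theta, x) for x, _ in t], [y for _, y in t]) for t in tasks)
pooled = [pair for t in tasks for pair in t]
pooled_loss = sum_sq([f(theta, x) for x, _ in pooled], [y for _, y in pooled])
print(per_task, pooled_loss)


# 2) Without a task identity, the same input x has two different targets, so even
#    the best possible predictor (the mean target per input, under squared error)
#    keeps a nonzero loss. Keying on (task id, x) removes the conflict.
def best_possible_loss(examples):
    """examples: list of (key, y); lowest summed squared error of any key -> y map."""
    by_key = defaultdict(list)
    for key, y in examples:
        by_key[key].append(y)
    return sum(sum_sq([mean(ys)] * len(ys), ys) for ys in by_key.values())


without_id = best_possible_loss([(x, y) for x, y in pooled])
with_id = best_possible_loss([((i, x), y) for i, t in enumerate(tasks) for x, y in t])
print(without_id, with_id)
```

In practice the task identifier is usually fed to a neural network as an extra input (for example a one-hot vector) rather than used as a lookup key, but the effect in this toy case is the same: the model can finally tell the two tasks apart.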